About us

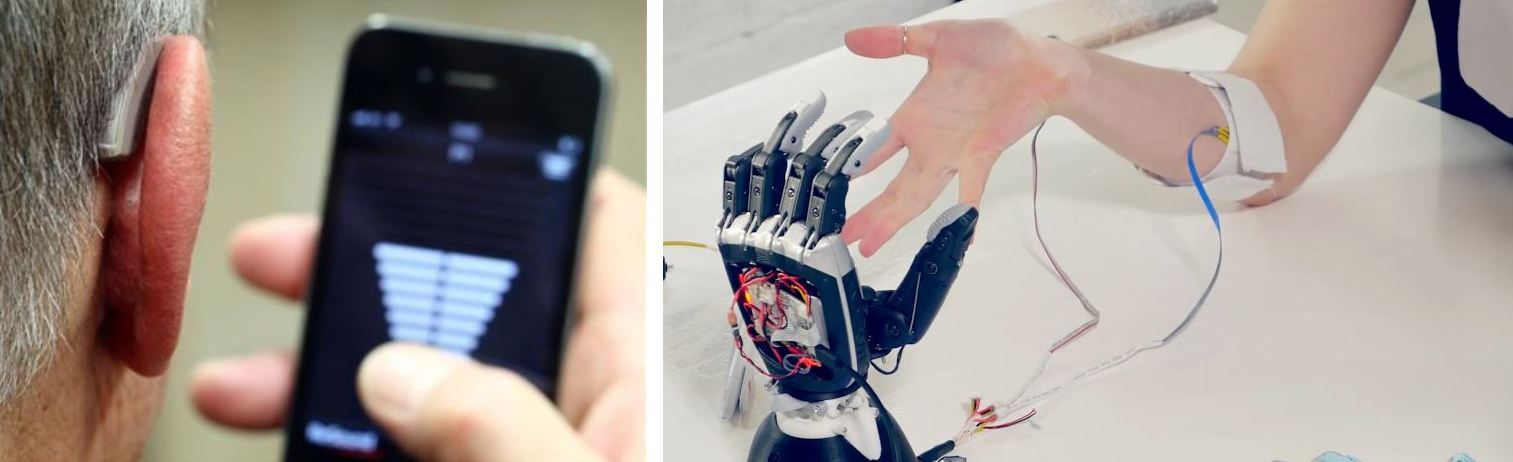

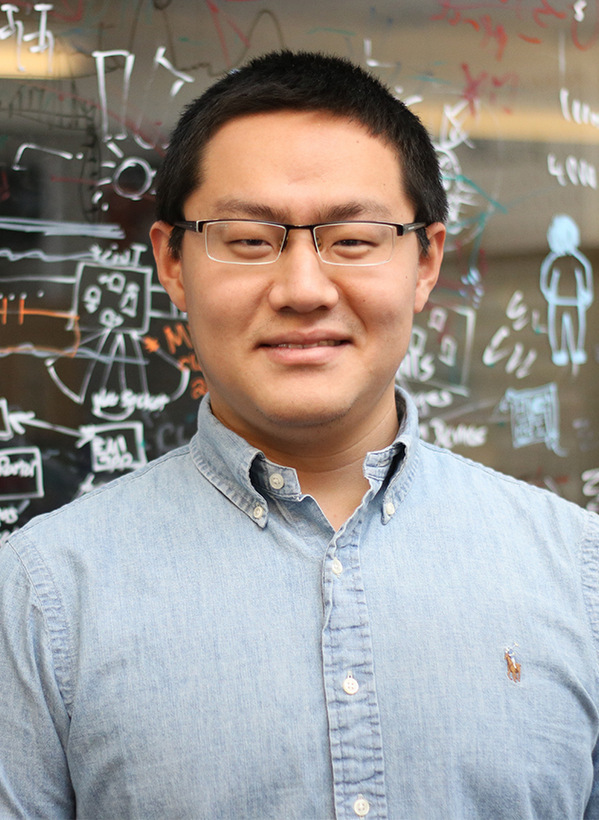

We are an advanced Human-Computer Interaction research lab in the Computer Science and Engineering Department at the University of Michigan. Our mission is to redefine hearing as a programmable, editable, and hyperpersonal experience. We see it not as a fixed sense, but as a customizable interface between people and their world, much as web design lets us program, rearrange, and personalize visual elements.

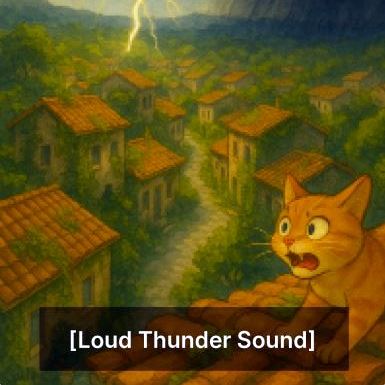

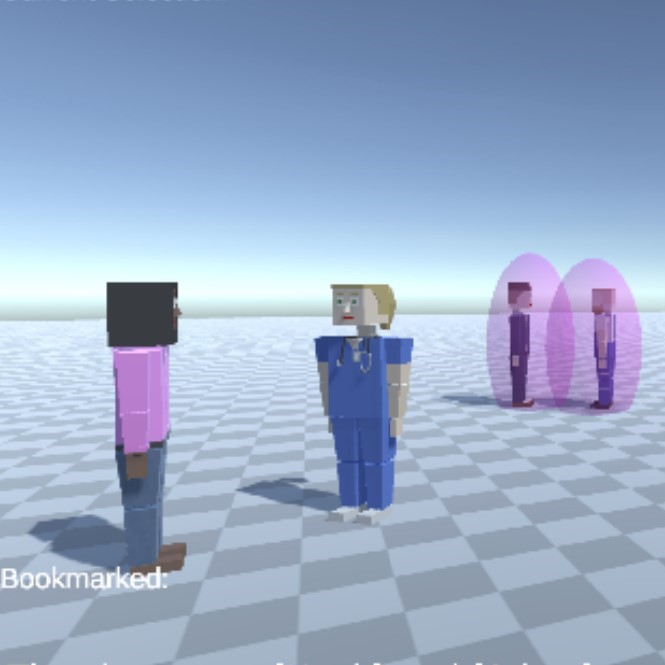

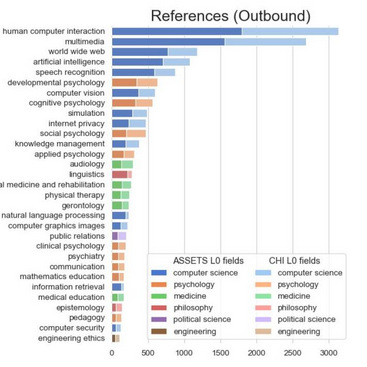

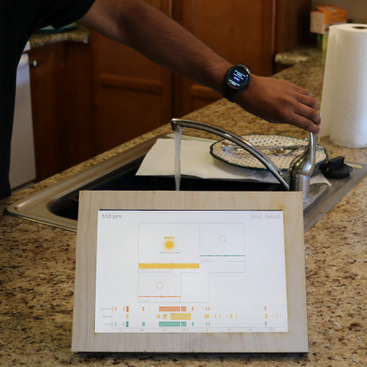

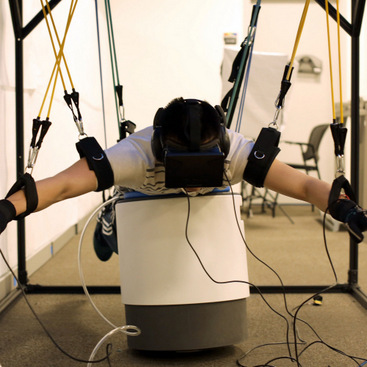

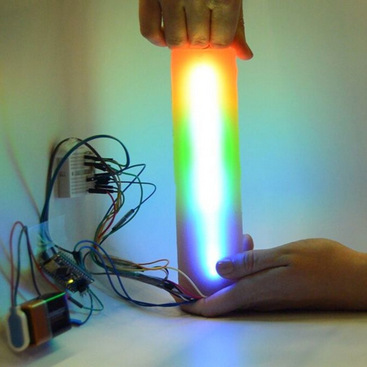

We design human-centered, agentic AI that empowers people to shape how they hear, perceive, and interact with sound. Our research spans accessibility, healthcare, and entertainment domains, with current projects including editable digital media soundscapes, relational audio tools for cross-neurotype communication, and adaptive hearing systems for clinical environments.

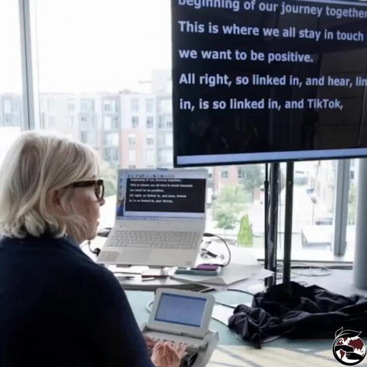

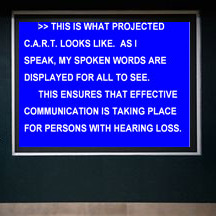

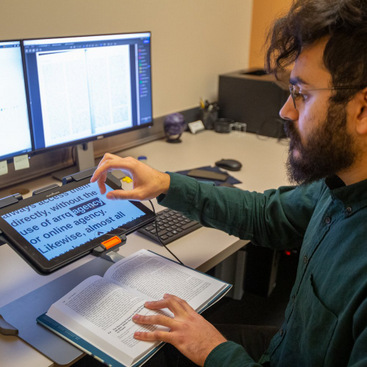

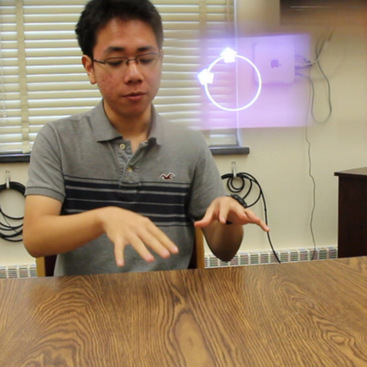

Accessibility is a core driver of our work. We view sound personalization not just as a convenience, but as a powerful way to make sound more inclusive and equitable. This includes developing systems for real-time audio captioning, enabling users to edit or customize captions, and creating tools that translate sound into formats tailored to individual sensory and cognitive needs.

We focus on accessibility because it is the entry point to future interfaces. Communities that rely on captioning, sensory augmentation, or neurodivergent-friendly tools are often the earliest adopters of new technologies. By designing for these users, we uncover what's next for everyone.

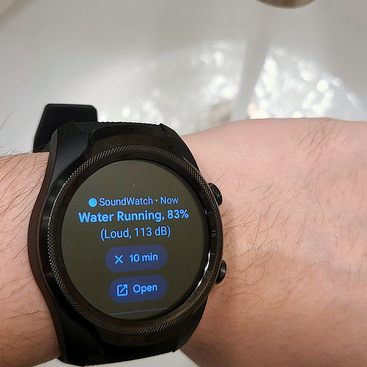

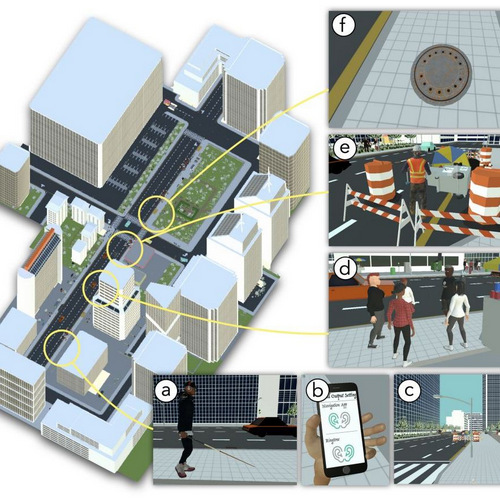

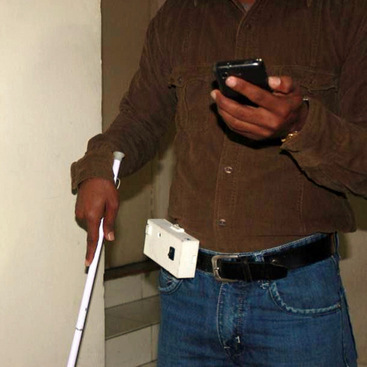

Our work is already making real-world impact. The SoundWatch app for sound awareness on smartwatches has over 4,000 downloads. Our indoor navigation system for visually impaired users has been used more than 100,000 times in museums across India. Our clinical communication tools are being deployed at Michigan Medicine. A feature we pioneered for people with paralysis is now built into every iPhone, and our work has directly influenced real-time captioning tools at Google.

These outcomes are made possible by our lab’s deeply collaborative, community-centered research. Our team includes HCI and AI researchers working with healthcare professionals, neuroscientists, engineers, psychologists, Deaf and disability studies scholars, musicians, designers, and sound artists. We partner closely with community members, end users, and organizations to tackle complex challenges and turn ideas into meaningful, trusted technologies.

Looking forward, we imagine a future of auditory superintelligence—systems that don’t just support hearing, but expand it. These tools will filter, interpret, and reshape soundscapes in real time, adapting to our needs and context. From cognitive hearing aids to sound-based memory support and emotion-aware audio companions, we aim to make hearing a fully customizable experience.

Explore our projects below—and get in touch if you’re interested in working with us.

Recent News

Aug 26: Best paper honorable mention for CARTGPT at ASSETS 2025!

Aug 14: Three demo papers accepted to ASSETS 2025: RAVEN, EvolveCaptions, and CapTune!

Jul 7: Our work on sound personalization for digital media accessibility has been accepted to ICMI 2025!

Jul 3: Three papers on improving DHH accessibility accepted to ASSETS 2025: SoundNarratives, CapTune, and CARTGPT! See you soon in Denver.

Jun 7: Our work on designing an adaptive music system for exercise has been accepted to ISMIR 2025!

Apr 15: We have three new incoming PhD students in Fall 2025. Welcome Sid, Lindy, and Veronica!

Mar 16: Our work on improving communication in operating rooms has been accepted to the journal ORL Head and Neck Nursing!

Feb 22: Our initial work on using runtime generative tools to improve the accessibility of virtual 3D scenes has been accepted to CHI 2025 LBW!

Feb 19: Our initial work on enhancing communication in high-noise operating rooms has been accepted as a poster at Collaborating Across Borders IX!

Jan 16: Our SoundWeaver system, which weaves multiple sources of sound information to present them accessibly to DHH users, has been accepted to CHI 2025!

Jan 16: Our proposal on dizziness diagnosis was approved for funding from the William Demant Foundation! See news article.

Oct 30: Our CARTGPT work received the best poster award at ASSETS!

Oct 11: Soundability Lab students are presenting 7 papers, demos, and posters at the upcoming UIST and ASSETS 2024 conferences!

Sep 30: We were awarded the Google Academic Research Award for Leo and Jeremy's project!